Artificial Worldviews

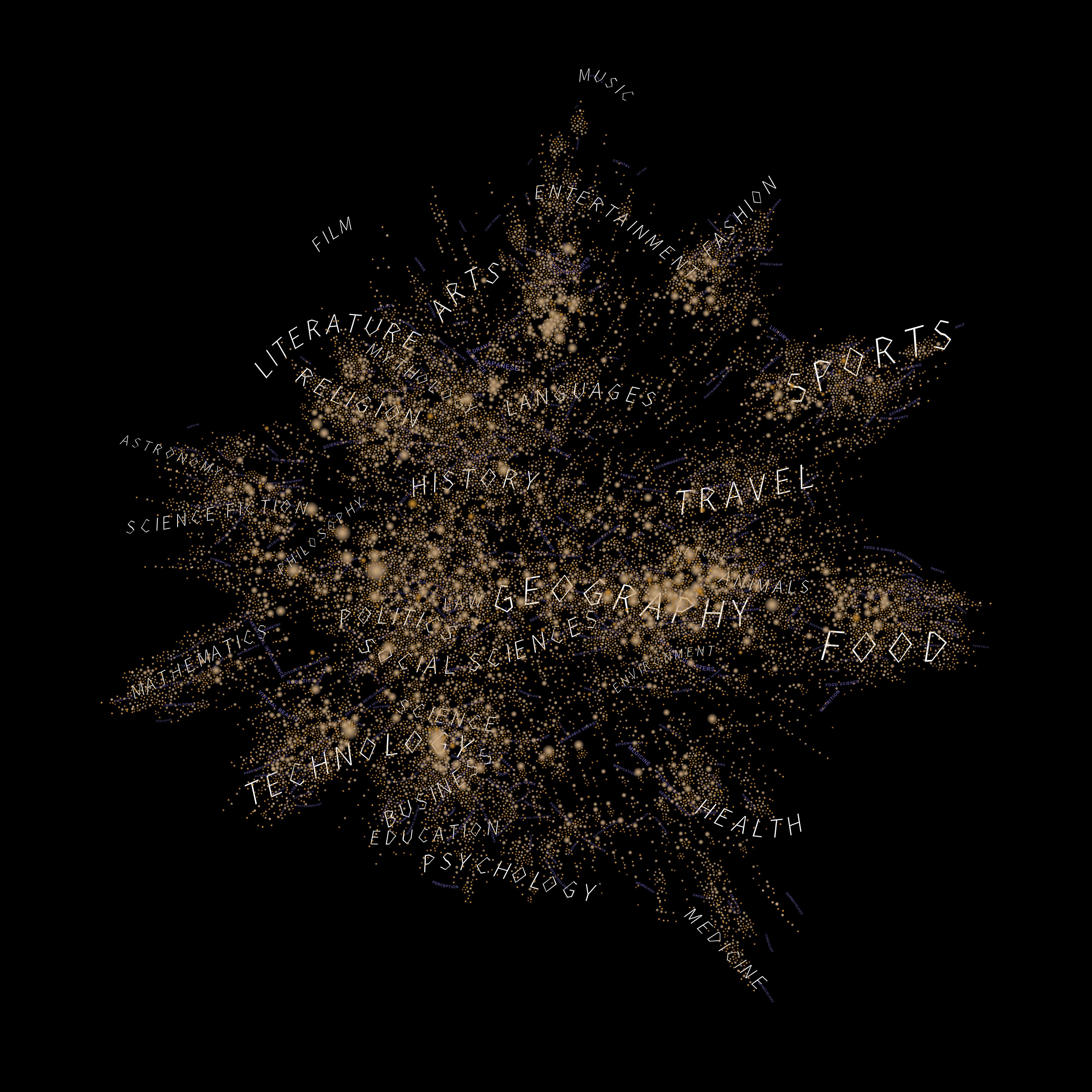

How does “prompting” shape the way we experience the world? Artificial Worldviews interrogated GPT-3.5 about its knowledge of the world in 1,764 prompts and mapped out the results.

- Location: Virtual

The advent of Large Language Models (LLMs) has revolutionized natural language processing and understanding. In recent years, these models have achieved remarkable success across a range of language-related tasks, a feat that was previously unthinkable. After its launch, ChatGPT quickly became the fastest-growing app in the history of web applications. But as these systems become common tools for generating content or finding information, from research and business to greeting cards, it is crucial to investigate their worldviews. Every media revolution changes how humans relate to one another; LLMs will have a vast impact on human communication. How will systems such as ChatGPT influence ideas, concepts, and writing styles over the next decade?

To grasp the situation, our research methodically requested data about ChatGPT's own knowledge from its underlying API. The first prompt was the following:

“Create a dataset in table format about the categories of all the knowledge you have.”

From this initial prompt, a recursive algorithm requested data about fields of knowledge, their subfields, and the humans, objects, places, and artifacts within these categorical systems. The generated data does not represent an unbiased picture of the knowledge inherent in GPT-3.5. Instead, it is a confluence of three forces: first, a representation of how the LLM handles the request; second, a perspective on the underlying textual training data; and third, a reflection of the political sets and settings embedded within the artificial neural network.
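The recursive requesting described above can be illustrated with a minimal sketch. It is not the project's actual code: the `ask` callable stands in for whatever API call returns a list of entries for a prompt, and the follow-up prompt wording, the `max_depth` limit, and the stub answers are all assumptions made for illustration. Only the root prompt is taken from the text.

```python
from typing import Callable, Dict, List

# The root prompt is quoted from the article; everything else is hypothetical.
ROOT_PROMPT = (
    "Create a dataset in table format about the categories "
    "of all the knowledge you have."
)


def child_prompt(entry: str) -> str:
    # Assumed wording for follow-up prompts; the real prompts are not given.
    return f"Create a dataset in table format about the subfields of {entry}."


def build_tree(name: str, prompt: str, ask: Callable[[str], List[str]],
               depth: int = 0, max_depth: int = 2) -> Dict:
    """Ask for entries under `prompt`, then recurse into each returned entry."""
    node = {"name": name, "children": []}
    if depth >= max_depth:
        return node  # stop descending at the assumed depth limit
    for entry in ask(prompt):
        node["children"].append(
            build_tree(entry, child_prompt(entry), ask, depth + 1, max_depth)
        )
    return node


def count_nodes(node: Dict) -> int:
    """Count this node plus all of its descendants."""
    return 1 + sum(count_nodes(child) for child in node["children"])


# Stub standing in for a model call, with invented placeholder answers.
CANNED = {
    ROOT_PROMPT: ["Field A", "Field B"],
    child_prompt("Field A"): ["Subfield A1", "Subfield A2"],
}


def stub_ask(prompt: str) -> List[str]:
    return CANNED.get(prompt, [])


tree = build_tree("knowledge", ROOT_PROMPT, stub_ask)
```

Swapping `stub_ask` for a function that sends the prompt to a model and parses the returned table into a list of names would turn the sketch into a crawler; a depth limit of some kind is needed because nothing in the recursion itself guarantees that the model stops returning new subfields.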

Our research questions are manifold and prompt a deeper inquiry into the nature of artificial intelligence. First, we are intrigued by the possibility of using this novel method as a means to understand AI systems. What can we learn from iteratively and methodically requesting data from large language models? Is the model a mirror reflecting our human intellect or an entity with its own inherent logic? Second, we are interested in questions that pertain to the dataset itself: What are the biases of the system? Are there fields that are overrepresented or underrepresented, and what does that signify about our collective online text corpus? How diverse will the dataset be, and what can that diversity teach us about the breadth and limitations of machine learning?

The most unforeseen finding

The data returned by GPT-3.5 is more female and more diverse than expected. Across the 1,764 prompts, the five most frequently returned entries are:

- Rachel Carson (named 73 times)

- Jane Goodall (named 60 times)

- Aristotle (named 52 times)

- Wangari Maathai (named 44 times)

- Isaac Newton (named 41 times)
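A ranking like the one above comes down to tallying how often each name appears across all responses. The following is a small sketch of that tally, assuming each response has already been parsed into a list of names. The function name `most_named` and the sample input are invented for illustration and are not the project's data.

```python
from collections import Counter
from typing import List, Tuple


def most_named(responses: List[List[str]], n: int = 5) -> List[Tuple[str, int]]:
    """Return the n most frequently named entries across all responses."""
    counts = Counter(
        name.strip()               # normalize stray whitespace before counting
        for names in responses
        for name in names
        if name.strip()            # skip empty entries
    )
    return counts.most_common(n)


# Toy input only: three hypothetical parsed responses.
sample = [
    ["Rachel Carson", "Aristotle"],
    ["Rachel Carson", "Jane Goodall"],
    ["Rachel Carson ", "Jane Goodall"],
]
top = most_named(sample, n=2)
```

In practice, name variants (for example, different spellings of the same person) would need to be merged before counting; this sketch only trims whitespace.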

So far, we do not know why or how a marine biologist, writer, and conservationist (Rachel Carson), the world’s foremost expert on chimpanzees (Jane Goodall), and a Kenyan social, environmental, and political activist (Wangari Maathai) became so central in the map. Leonardo da Vinci, Charles Darwin, Albert Einstein, Alan Turing, Elon Musk, Galileo Galilei, Karl Marx, William Shakespeare, Winston Churchill, Carl Sagan, Sigmund Freud, Mahatma Gandhi, and Nelson Mandela are all named less frequently than these three women. Might OpenAI have set certain parameters that lead to these results? Are prompt engineers pushing certain perspectives to become more visible? Or does GPT-3.5 care a lot for the planet and the environment?