Choreographic Interface

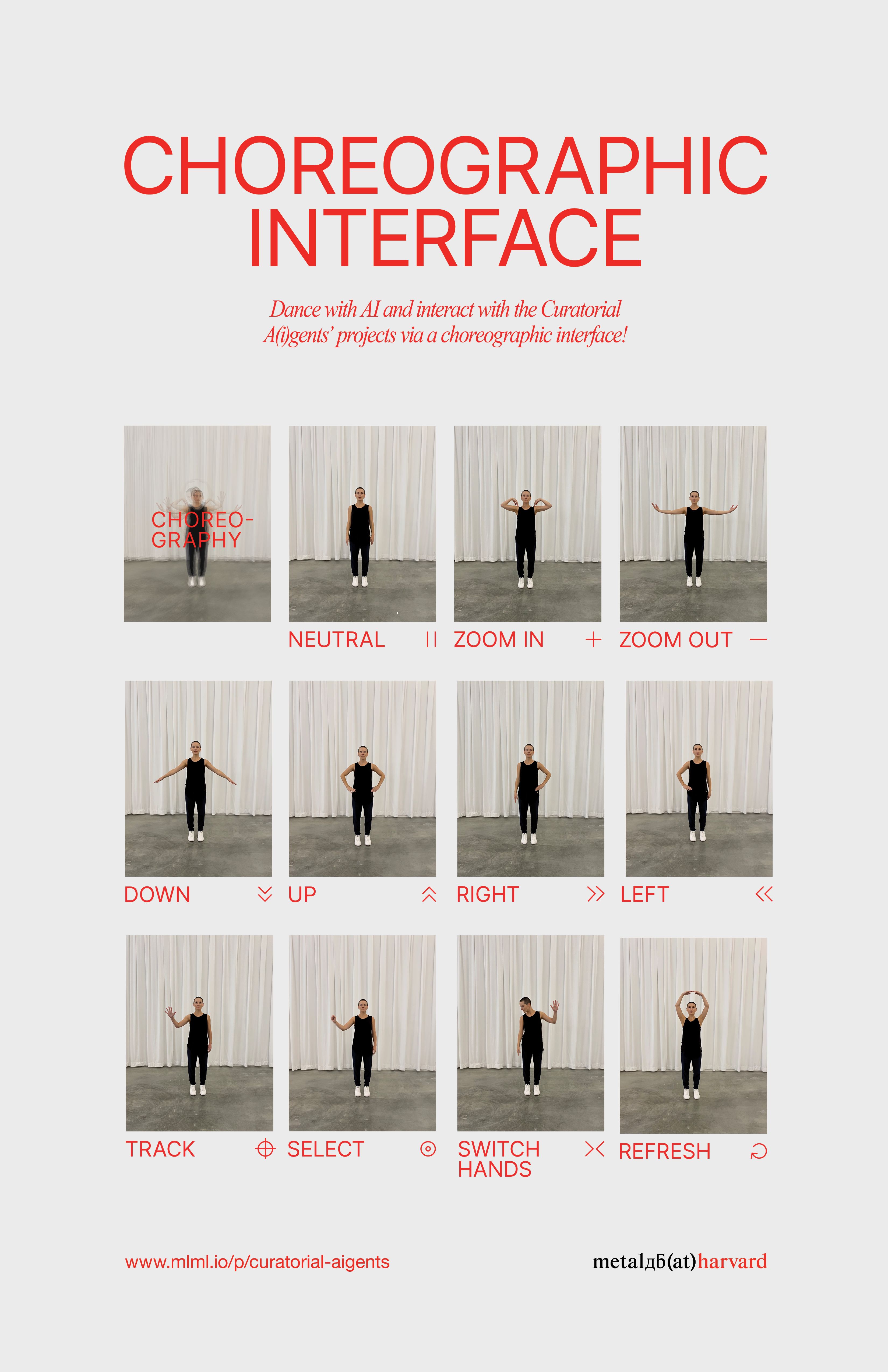

As part of Curatorial A(i)gents, we researched how a choreographic interface could enable visitors to interact with the show’s projects via a dance vocabulary.


What is a choreographic interface and what role does dance choreography play in its design process?

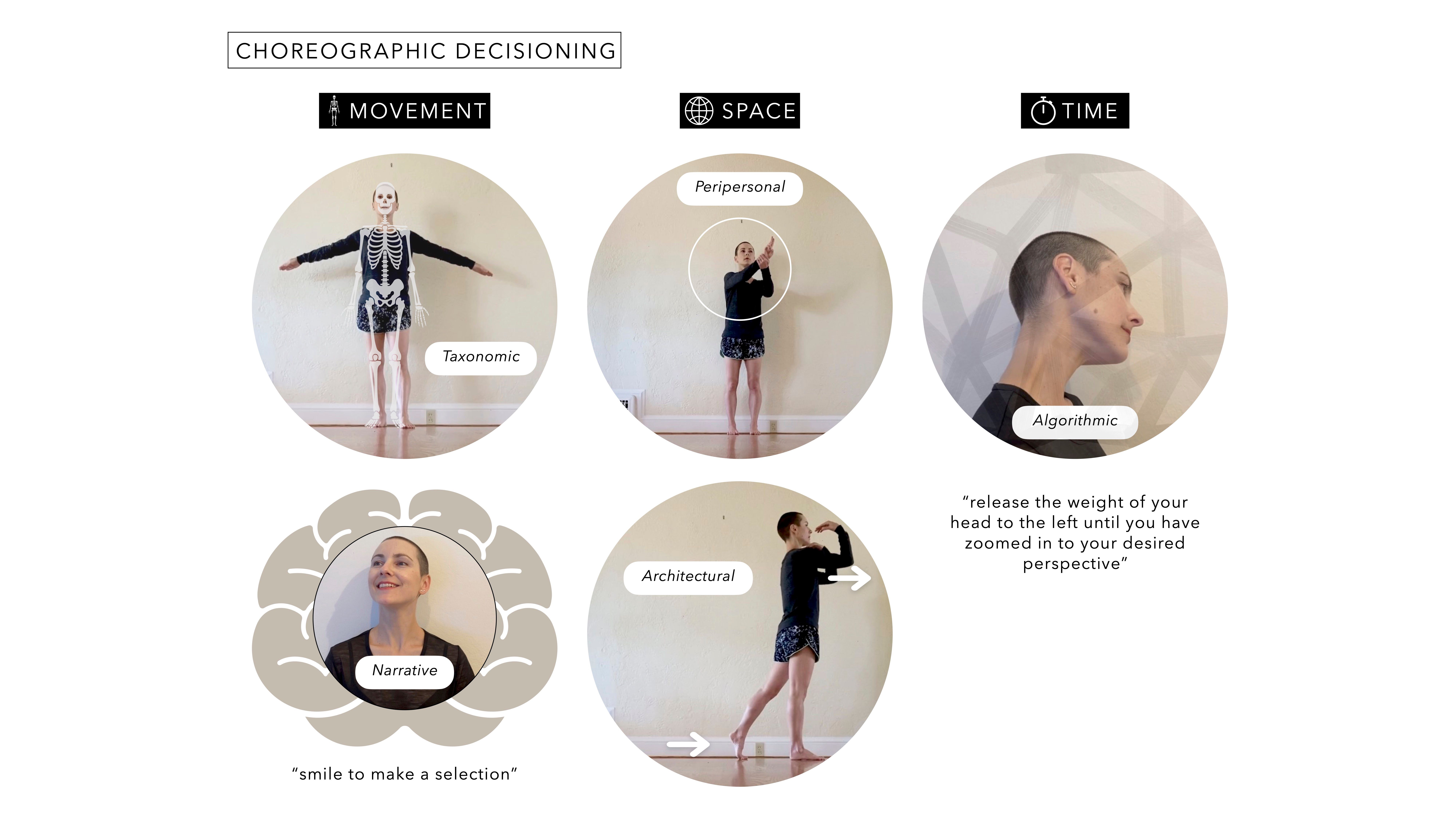

In 2019, metaLAB began work on Curatorial A(i)gents, a group exhibition presenting a series of machine-learning-based experiments with museum collections developed by members and affiliates of the lab. The show was slated to premiere the following year at the Harvard Art Museums’ Lightbox Gallery. After the pandemic postponed the show until 2022, metaLAB assembled a team to develop a “touchless” solution for interacting with the digital projects in the show. Conceiving this solution as a choreographic interface, the team researched how a full-torso gestural vocabulary could let visitors move freely in the Lightbox Gallery without having to touch any shared devices.

Prototype

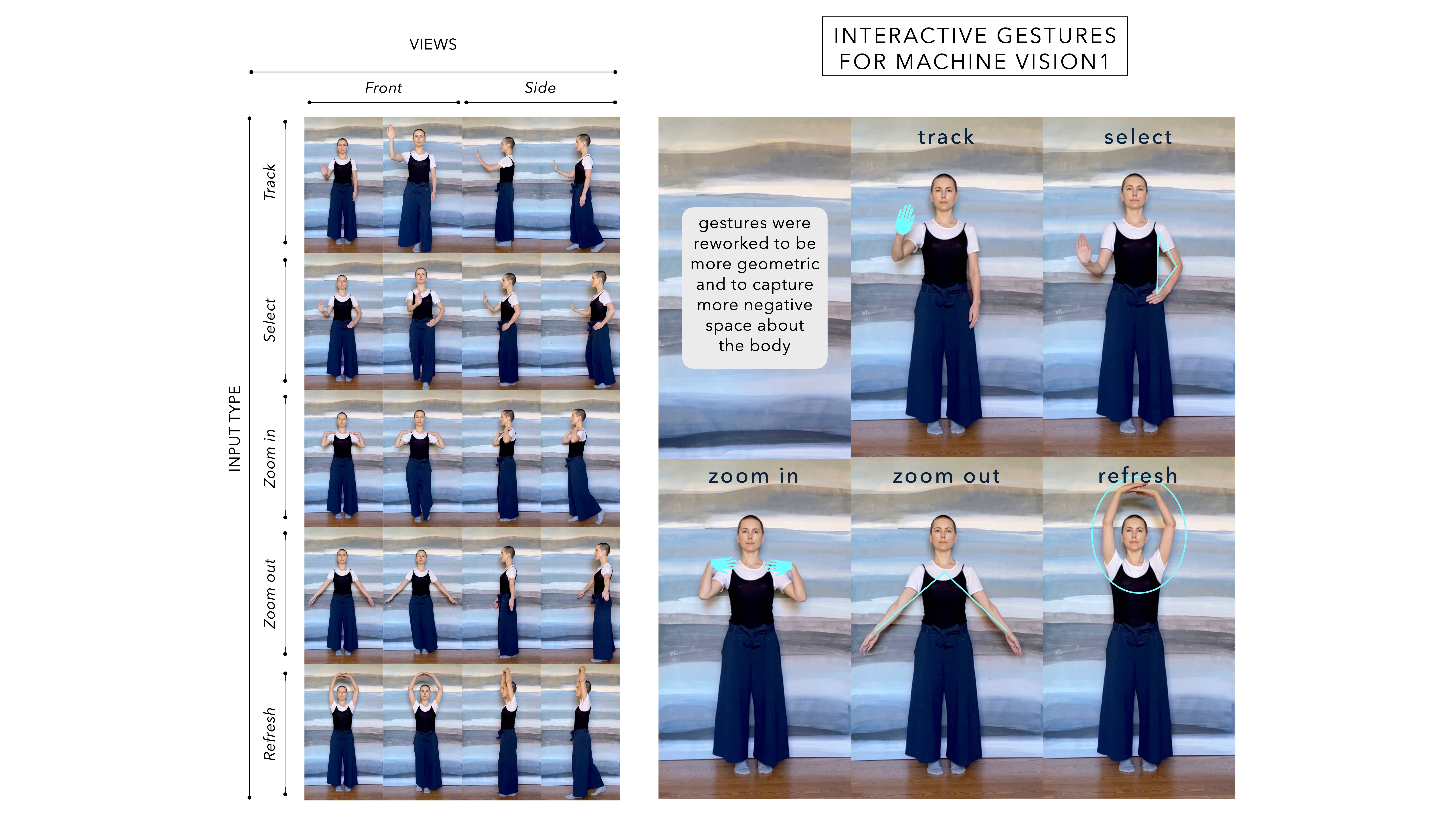

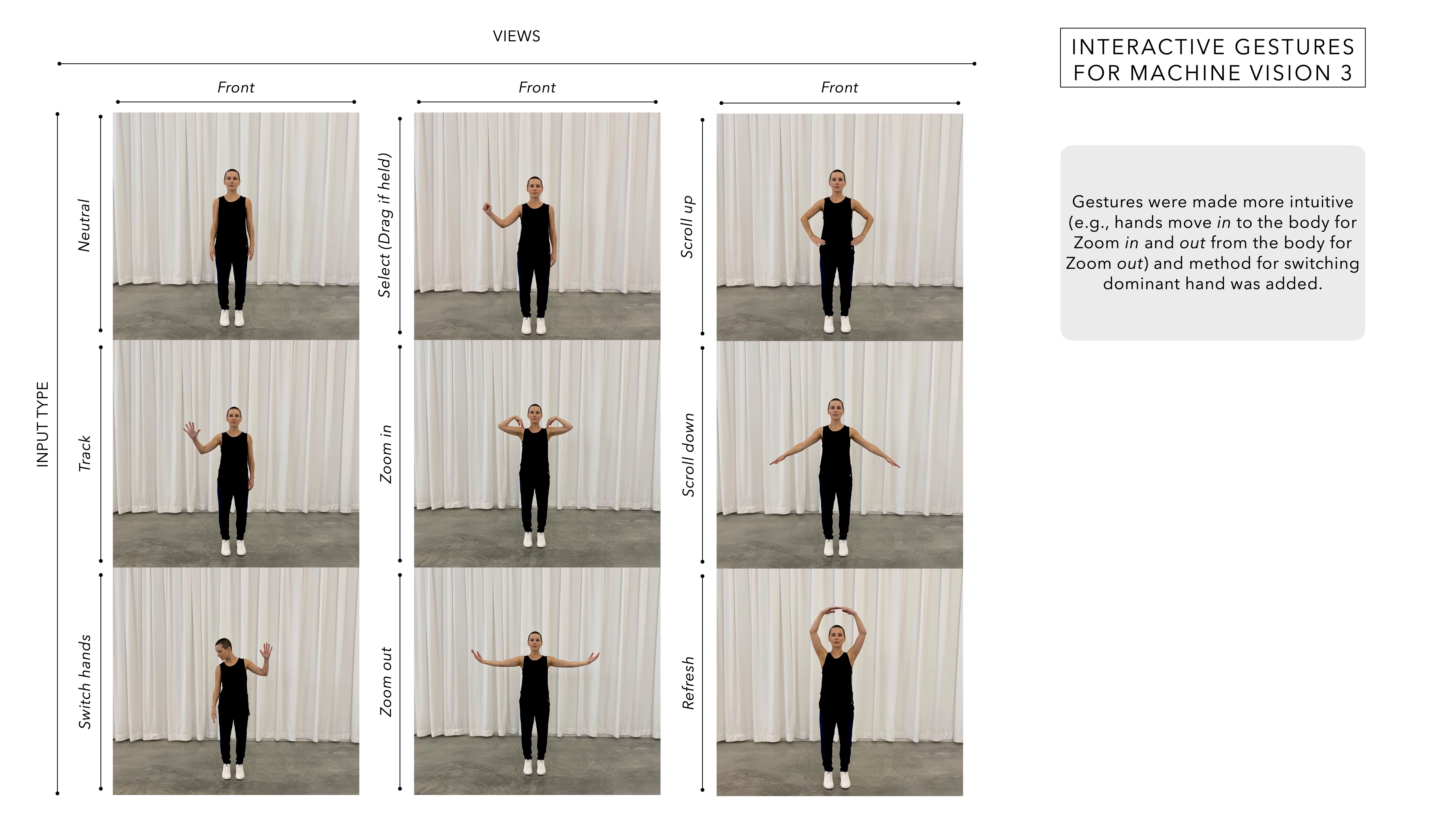

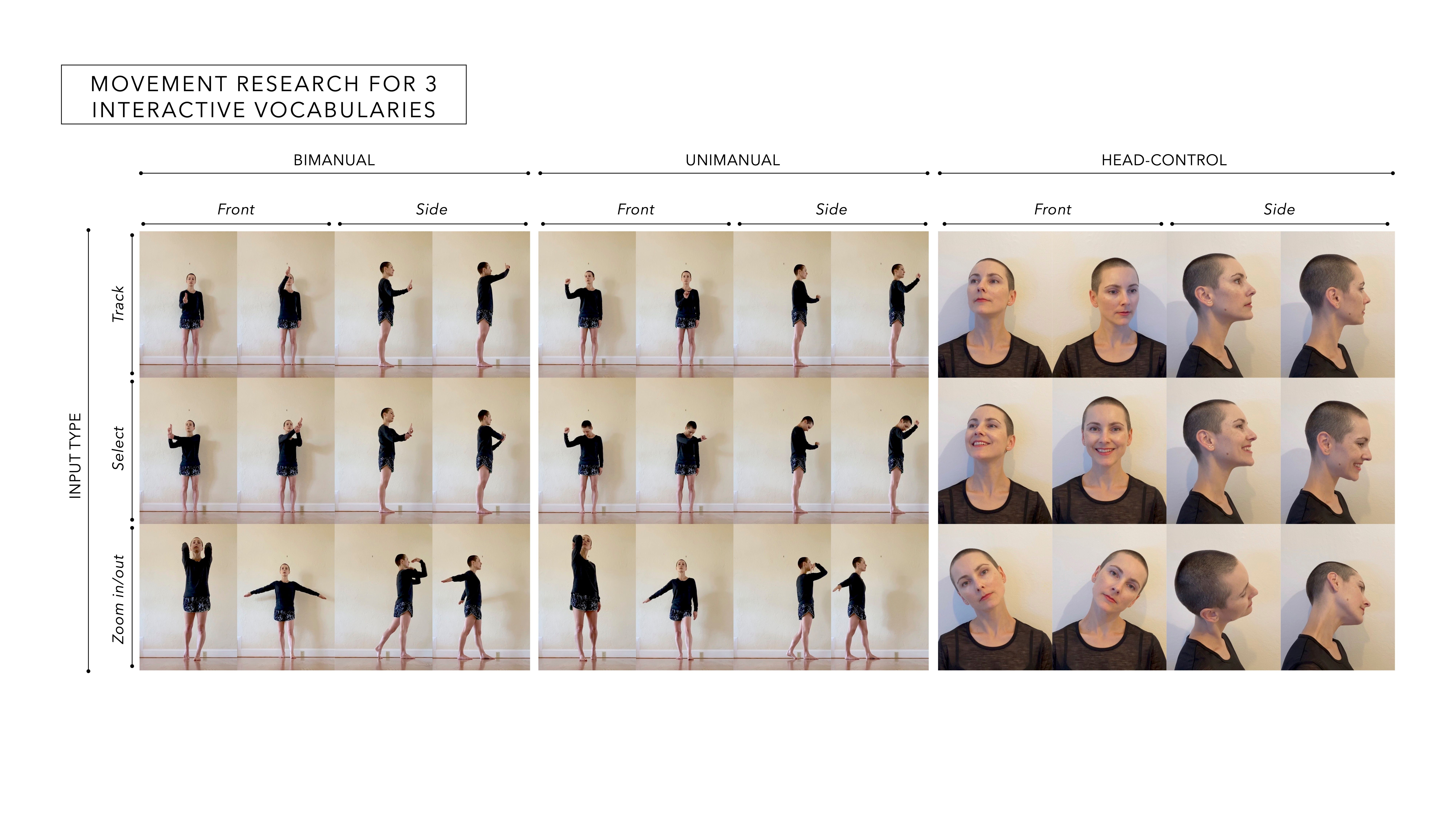

For the Harvard Art Museums prototype, Lins Derry and Jordan Kruguer began with the challenge of designing a post-pandemic “touchless” interface that would allow visitors to safely interact with the screen-based projects in Curatorial A(i)gents. This challenge led to the idea of using machine vision to mediate user interactions. Starting with accessibility research, the team workshopped potential embodied interactions with movement and disability experts before experimenting with how machine vision and machine learning models interpret choreographed movement.

The team then built and tested the architecture to support a simple gestural set: zoom in/out, scroll up/down, select, span, switch hands, and refresh. The torso-scale gestures for performing these interactions were derived from Lins’s choreography. Maximillian Mueller later joined the team to develop a sonification score for the interactive gestures.
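The text does not describe how recognized gestures were wired to the projects. As a purely illustrative sketch, assuming an upstream pose model that emits at most one gesture label per video frame, the hypothetical `GestureDispatcher` below maps the gesture set named above to interface callbacks. It fires each command only after a gesture has been held for several consecutive frames, one plausible way to keep visitors' free movement in the gallery from triggering accidental commands. The class, the `hold_frames` threshold, the "active hand" state, and the callback signature are all assumptions, not the team's implementation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional


class Gesture(Enum):
    """The gesture set named in the text ("span" kept as written)."""
    ZOOM_IN = "zoom_in"
    ZOOM_OUT = "zoom_out"
    SCROLL_UP = "scroll_up"
    SCROLL_DOWN = "scroll_down"
    SELECT = "select"
    SPAN = "span"
    SWITCH_HANDS = "switch_hands"
    REFRESH = "refresh"


@dataclass
class GestureDispatcher:
    """Hypothetical dispatcher: fires a command once the same gesture label
    has been seen for `hold_frames` consecutive frames."""
    handlers: Dict[Gesture, Callable[[str], None]]
    hold_frames: int = 5          # assumed threshold, not from the source
    active_hand: str = "right"    # hand currently driving the interface
    _last: Optional[Gesture] = field(default=None, init=False, repr=False)
    _count: int = field(default=0, init=False, repr=False)
    _fired: bool = field(default=False, init=False, repr=False)

    def update(self, label: Optional[Gesture]) -> Optional[Gesture]:
        """Feed one per-frame label (None = no gesture detected).
        Returns the gesture that fired on this frame, if any."""
        if label != self._last:
            # A different label (or no label) restarts the hold counter.
            self._last, self._count, self._fired = label, 0, False
        if label is None:
            return None
        self._count += 1
        if self._count >= self.hold_frames and not self._fired:
            self._fired = True  # fire only once per sustained hold
            if label is Gesture.SWITCH_HANDS:
                self.active_hand = "left" if self.active_hand == "right" else "right"
            handler = self.handlers.get(label)
            if handler is not None:
                handler(self.active_hand)
            return label
        return None


# Usage: log which commands fire for a short sequence of per-frame labels.
log: List[str] = []
dispatcher = GestureDispatcher(
    handlers={g: (lambda hand, g=g: log.append(f"{g.value}:{hand}")) for g in Gesture},
    hold_frames=3,
)
frames = [Gesture.SELECT] * 4 + [None] + [Gesture.SWITCH_HANDS] * 3 + [Gesture.SCROLL_UP] * 3
for frame in frames:
    dispatcher.update(frame)
print(log)  # ['select:right', 'switch_hands:left', 'scroll_up:left']
```

Requiring a sustained hold trades a little latency for robustness against incidental motion. A working system would still need the per-frame gesture classifier itself, which this sketch leaves out.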

Below is a video showing how the interface worked with the various projects.